Ignition systems must light mixtures reliably across cold starts, idle with EGR dilution, high rpm, and boosted full load. Coil‑on‑plug (COP) architectures shorten the high‑voltage path and allow per‑cylinder control, but success hinges on precise dwell management, suitable spark plugs, and robust diagnostics. Under boost, higher in‑cylinder pressure raises breakdown voltage and energy demand, stressing coils and plugs. Modern ECUs account for battery voltage, temperature, lambda, and rpm to maintain spark energy without overheating coils. Meanwhile, on‑board misfire detection protects emissions hardware and guides service. This overview links COP hardware, dwell strategy, plug design, boosted ignition needs, and misfire monitoring into a coherent picture.

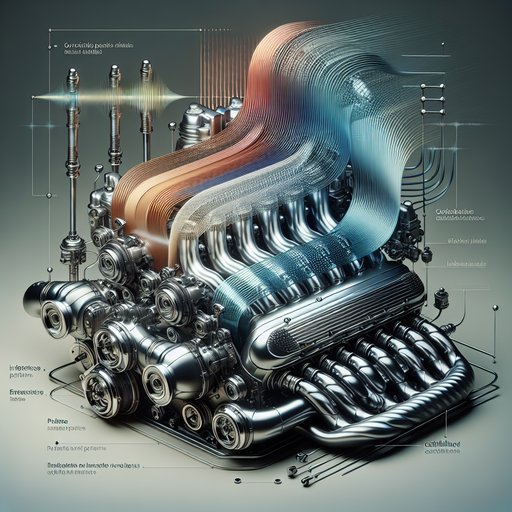

The core ignition challenge is delivering adequate spark energy at the right time into a dense, fast‑moving, often diluted mixture. Coil‑on‑plug systems place a dedicated step‑up transformer on each plug, eliminating long secondary leads and their capacitive losses. That increases delivered energy, reduces electromagnetic interference, and enables cylinder‑specific timing, dwell, and even multi‑strike strategies to stabilize idle or lean combustion. The governing idea is simple: a coil stores energy in its magnetic field during dwell and releases it across the plug when the driver opens.

The ECU must tailor dwell to reach a target primary current without saturating the core or overheating the windings. Because dI/dt ≈ V/L while the voltage drop across the winding resistance is small, battery voltage and coil inductance set how fast the current rises. With a typical COP coil (L ≈ 2–4 mH) at 14 V, the current ramps at ~3.5–7 A/ms, so reaching 10 A takes ~1.5–3 ms. At 9 V (cold crank), the ramp slows to ~2.25–4.5 A/ms and the same coil needs ~2.2–4.4 ms; winding resistance stretches these times further.
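
The dwell arithmetic above can be reproduced with a short Python sketch. The function names and the 0.5 Ω primary resistance are illustrative assumptions, not values from any particular coil datasheet. The second function uses the standard RL charging curve to show how resistance lengthens dwell compared with the linear V/L estimate.

```python
import math


def dwell_linear_ms(target_a, battery_v, inductance_mh):
    """Dwell from the linear ramp dI/dt = V/L (winding resistance ignored)."""
    # A * mH / V works out to milliseconds.
    return target_a * inductance_mh / battery_v


def dwell_rl_ms(target_a, battery_v, inductance_mh, resistance_ohm):
    """Dwell from RL charging: I(t) = (V/R) * (1 - exp(-R*t/L))."""
    i_max = battery_v / resistance_ohm  # current the coil would settle at
    if target_a >= i_max:
        raise ValueError("target current is not reachable at this voltage")
    # mH / ohm works out to milliseconds.
    return -(inductance_mh / resistance_ohm) * math.log(1.0 - target_a / i_max)


# Hypothetical coil: 3 mH primary, assumed 0.5 ohm primary resistance.
for volts in (14.0, 9.0):
    linear = dwell_linear_ms(10.0, volts, 3.0)
    with_r = dwell_rl_ms(10.0, volts, 3.0, 0.5)
    print(f"{volts:4.1f} V: linear {linear:.2f} ms, with resistance {with_r:.2f} ms")
```

A production dwell table would normally be measured on the actual coil rather than computed, since inductance falls as the core approaches saturation.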

Stored energy is E ≈ ½·L·I²: L = 3 mH and I = 10 A yield ~150 mJ in the primary, of which 20–60 mJ is commonly delivered to the secondary arc, depending on losses and burn duration. Dwell control therefore adapts to battery voltage, rpm (the coil charge time available per cycle), coolant and coil temperature (to keep coil body temperatures below roughly 120–140 °C), and combustion conditions. At low rpm and light load, some ECUs add multi‑strike (2–4 sparks within ~1–4 ms) to improve kernel formation with high EGR or lean mixtures (e.g., λ 1.1–1.4). At high rpm the available dwell shrinks, and the strategy prioritizes a single strong pulse.
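
As a quick check on the energy figures, the following Python sketch evaluates E = ½·L·I² and the fraction of stored energy that reaches the arc. The helper names are hypothetical and the numbers are the ones quoted in the text.

```python
import math


def stored_energy_mj(inductance_mh, current_a):
    """Magnetic energy in the primary, E = 0.5 * L * I^2 (mH * A^2 = mJ)."""
    return 0.5 * inductance_mh * current_a ** 2


def current_for_energy_a(energy_mj, inductance_mh):
    """Primary current needed to store a given energy, I = sqrt(2E / L)."""
    return math.sqrt(2.0 * energy_mj / inductance_mh)


def transfer_fraction(delivered_mj, stored_mj):
    """Share of the stored energy that ends up in the secondary arc."""
    return delivered_mj / stored_mj


stored = stored_energy_mj(3.0, 10.0)  # 3 mH at 10 A
print(f"stored: {stored:.0f} mJ")
for delivered in (20.0, 60.0):
    share = transfer_fraction(delivered, stored)
    print(f"{delivered:.0f} mJ delivered is {share:.0%} of stored energy")
```

On these figures, only about 13–40% of the primary energy reaches the arc, which is why coil sizing starts from the delivered-energy target rather than the stored value.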

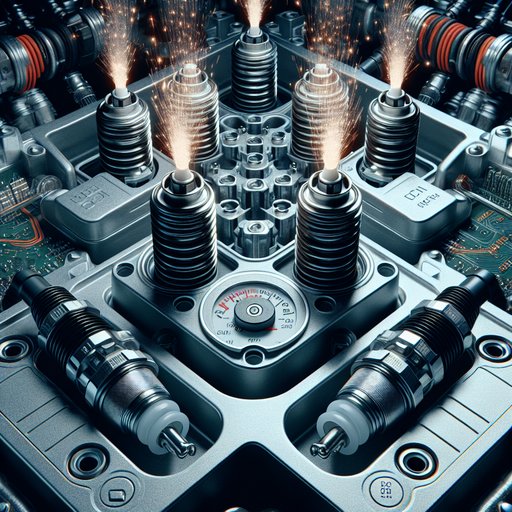

Typical burn times are 0.8–1.6 ms at stoichiometric, longer for lean. Breakdown voltage at a 0.7–0.9 mm gap is ~8–12 kV near 1–2 bar, rising to ~20–35 kV when pressure at spark is 15–25 bar, as in boosted operation.

Spark plugs with precious‑metal fine‑wire center electrodes (iridium or platinum) materially lower the required firing voltage and resist erosion. Iridium (melting point ~2446 °C) allows 0.4–0.6 mm center electrodes that concentrate the electric field, reducing breakdown voltage and kernel quenching. Platinum (~1768 °C) is often used as a pad on the ground strap to curb recession. Resistor values of 3–5 kΩ are typical to limit RFI. Heat range selection targets tip temperatures of ~450–850 °C to avoid fouling (too cold) or pre‑ignition (too hot). Gaps of 0.6–0.9 mm are common; boosted, high‑pressure applications may run the lower end of that range to keep the required kV within coil capability without unduly sacrificing kernel growth.

Under boost, ignition demands climb because higher density and pressure increase breakdown voltage and shorten the early flame kernel’s laminar growth time. Many turbo gasoline engines run rich at WOT (e.g., λ 0.80–0.90, or AFR ~11.8–13.2:1 for a 14.7:1 stoichiometric ratio), which helps ignitability, but the elevated pressure still dominates the voltage requirement. Practical coil targets are 60–120 mJ stored and 20–70 mJ delivered, with 10–14 A primary current and 2–4 ms dwell at 13.5–14 V. A secondary voltage capability of 35–40 kV and robust insulation are needed to provide headroom and avoid flashover.
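
Two of the quantities in this paragraph are simple ratios, and a short Python sketch makes them explicit. The stoichiometric ratio of 14.7:1 is an assumed nominal value for gasoline (ethanol blends are lower), and `voltage_headroom` is a hypothetical helper, not a standard metric.

```python
GASOLINE_STOICH_AFR = 14.7  # assumed nominal value; varies with fuel blend


def afr_from_lambda(lam, stoich_afr=GASOLINE_STOICH_AFR):
    """Air-fuel ratio for a given lambda: AFR = lambda * stoichiometric AFR."""
    return lam * stoich_afr


def voltage_headroom(coil_kv, required_kv):
    """Ratio of available secondary voltage to required breakdown voltage."""
    return coil_kv / required_kv


for lam in (0.80, 0.90):
    print(f"lambda {lam:.2f} -> AFR {afr_from_lambda(lam):.2f}:1")

# Worst case from the figures in the text: 35 kV coil against 35 kV demand.
print(f"headroom: {voltage_headroom(35.0, 35.0):.2f}x")
```

A headroom ratio near 1.0 at the worst-case operating point leaves no margin for gap erosion, which is one reason boosted applications tend toward the smaller end of the gap range.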

With high EGR (15–25% mass fraction) or stratified lean combustion (λ > 1.3), longer burn time and sometimes multi‑strike improve stability. Excessive gap growth (erosion) and carbon tracking raise misfire risk; service intervals reflect this, with iridium/platinum plugs often specified for 90,000–160,000 km.

Misfire diagnostics use crankshaft speed fluctuation as the primary signal. The ECU divides the cycle into segments tied to each cylinder’s power stroke and looks for short‑term decelerations where combustion should have accelerated the crankshaft.
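
The crankshaft‑speed idea can be sketched in Python as follows. This is a deliberately simplified illustration: the class name, the 5% slowdown threshold, and the 1000‑revolution window are assumptions chosen for the example, and production monitors also compensate for tooth‑spacing error, torsional vibration, and rough‑road input.

```python
from collections import deque


class MisfireMonitor:
    """Flags a misfire when a firing segment takes noticeably longer
    than the segment before it (i.e. the crankshaft decelerated)."""

    def __init__(self, n_cyl, window_revs=1000, slowdown_ratio=1.05):
        # Four-stroke engine: each cylinder fires once per two revolutions.
        firings_per_rev = n_cyl / 2
        self.events = deque(maxlen=int(window_revs * firings_per_rev))
        self.slowdown_ratio = slowdown_ratio  # assumed detection threshold
        self.prev_period_us = None

    def add_segment(self, cyl, period_us):
        """Record one firing segment; return True if it looks like a misfire."""
        misfire = (
            self.prev_period_us is not None
            and period_us > self.prev_period_us * self.slowdown_ratio
        )
        self.events.append((cyl, misfire))
        self.prev_period_us = period_us
        return misfire

    def rate(self, cyl=None):
        """Misfire fraction over the rolling window, optionally per cylinder."""
        flags = [m for c, m in self.events if cyl is None or c == cyl]
        return sum(flags) / len(flags) if flags else 0.0


# Ten cycles of a four-cylinder engine with one slow segment on cylinder 3.
monitor = MisfireMonitor(n_cyl=4)
for cycle in range(10):
    for cyl in (1, 3, 4, 2):  # firing order
        slow = cycle == 5 and cyl == 3
        monitor.add_segment(cyl, 5400 if slow else 5000)
print(monitor.rate(), monitor.rate(cyl=3))
```

Comparing each segment only with its predecessor keeps the sketch short, but it will miss back‑to‑back misfires; a rolling baseline over several segments is a common refinement.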

Per‑cylinder counters accumulate misfires over 200–1000 revolutions; thresholds differentiate emissions‑relevant rates (e.g., ~2–3% per OBD requirements) from catalyst‑damaging rates (e.g., >10% triggers a flashing MIL). Supplemental methods include ion‑sense current measurement through the plug (where equipped) and inferred detection via oxygen sensor oscillations, catalyst temperature rise, or exhaust pressure pulsations.

In service, primary‑current and secondary‑voltage waveform analysis shows the dwell ramp slope (V/L), firing kV, and burn time; a rising spark line can indicate lean or dilute mixtures, while a very short burn suggests high pressure, excessive gap, or a weak coil.

Implications: COP reduces energy loss and improves timing authority, aiding cold starts, lean and EGR limits, and knock tolerance. Proper dwell control prevents coil overheating and maintains consistent spark at low voltage. Iridium/platinum fine‑wire plugs cut firing voltage and extend life, which is especially valuable under high boost pressures and EGR. Sizing the gap and coil headroom to meet ~20–35 kV breakdown under load preserves reliability. Accurate misfire detection protects catalysts and keeps emissions within standards such as Euro 6/7, while guiding maintenance.

For drivers, the net effect is stable idle, clean transients, and robust torque delivery without stumble even under high boost.